Somehow it seems to fill my head with ideas — only I don’t exactly know what they are!

— Lewis Carroll

Is Facebook killing our democratic way of life? If you believe the headlines, it sure is. The Guardian calls the social media giant a “Digital Gangster Destroying Democracy.” The New Yorker asks, “Can Mark Zuckerberg Fix Facebook before It Breaks Democracy?” Even Al Jazeera wants to know, “Is Facebook Ruining the World?” There are literally scores of pieces on this question, reflecting the view that, in these anxious times, the sixteen-year-old platform is a decidedly unique threat.

Three main arguments are commonly put forward to illustrate Facebook’s fecklessness: it is monopolistic, it runs roughshod over our privacy, and it is dangerously dividing people with its content, making it impossible to find the common ground on which democratic political participation — and legitimacy — depends. (Just think of your feed, if you were brave enough to look, on the evening of November 3.) Facebook is indeed guilty of the first two counts, although it is not alone. The third charge is far more complicated and far less convincing.

Despite the near-constant refrain about Facebook’s power, the company is actually the smallest of the big tech firms that are exerting so much influence on our lives (Amazon, Apple, Microsoft, and Google’s parent company, Alphabet, are the others). Still, Facebook is an enormous entity. Its 2.5 billion monthly users are constantly enlarging a massive repository of information about themselves and their friends, families, colleagues, and contacts. Facebook recognized early on, as The Economist wrote in 2017, that data is “the world’s most valuable resource.” The insights the company draws from this vast resource mean it can target individuals better than any other advertising platform in history. The result? If you advertise, you really can’t afford not to be on Facebook.

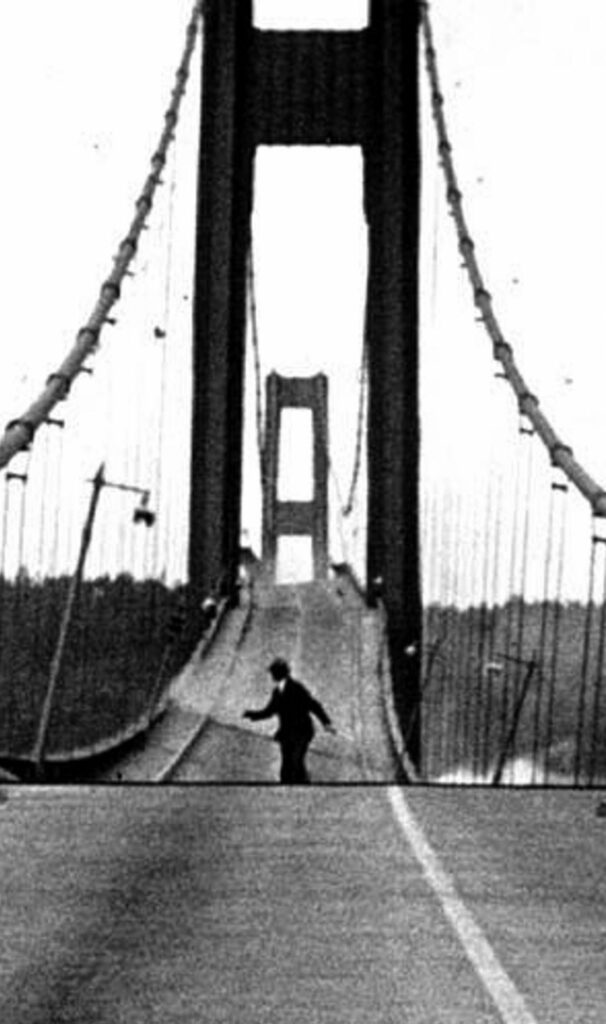

Can we successfully bridge our differences?

Historic Collection; Alamy Stock Photo

The company’s dominance has led to its market valuation of nearly $800 billion (U.S.), putting it within striking distance of $1 trillion (the four other big tech firms are the only other American companies to have reached this milestone). It’s not Facebook’s size on its own that should worry us but its size relative to its competition. Depending on the year and the research cited, Facebook and Alphabet together control between two-thirds and four-fifths of the digital advertising market. In other words, every other website out there that sells ads — there are tons of them — is competing for the remaining twenty to thirty-three cents of every advertising dollar being spent digitally.

As any first-year economics student knows, competition is a key factor in our market system, providing innovation and improvements in products and services, consumer choice, and generally lower prices. The challenge here is principally a market-access problem: by dint of first-mover advantage, acquisition of other popular tech companies (Instagram, WhatsApp, Oculus VR), questionable practices, and scale never before seen, Facebook has become a platform that stifles competition. (In October, Democrats on the U.S. House of Representatives Judiciary Committee issued recommendations that could hinder the company’s ability to acquire rivals in the future.)

Monopolistic behaviour isn’t only an economic problem; monopolies are bad for citizens’ political power, too. Less competition in the marketplace reduces one’s ability to negotiate pay, for instance, or find better work. More ominously, monopolies may contribute to the erosion of political freedom as the wealthy and powerful use their resources to make governments work for them and their corporate needs, rather than for the common good. As the Vanderbilt law professor Ganesh Sitaraman, writing about the United States, has put it, “When a small number of people wield unchecked power, they can oppress their workers and employees, crush the opportunity of any entrepreneur or small business, and even control the government.” We end up with an oligarchy or plutocracy, “in which freedom exists only for those with wealth and power.” And as Columbia Law School’s Tim Wu says, “When a concentrated private power has such control over what we see and hear, it has a power that rivals or exceeds that of elected government.”

So, on the first charge — that it’s monopolistic — is Facebook guilty? Yes. But similar charges have been levelled against other major tech companies. Congressional antitrust hearings have begun in Washington, the U.S. Justice Department has just sued Google, and the European Union’s tenacious commissioner for competition, Margrethe Vestager, has set her sights on some of these companies as well. (Microsoft, having had its brush with antitrust regulators in the late 1990s and early 2000s, seems to have learned its lesson and is keeping its nose clean, more or less.)

The second argument is that Facebook is cavalier with its users’ data — that it manipulates this vast treasure trove of data to perfect online behaviour modification. Using digital carrots and sticks, the platform induces its billions of users to react to emotional cues that benefit the company’s bottom line.

Anyone who has logged in recently knows the results of this manipulation: Ads seem to know exactly what we’re thinking, even before we do. We’re prompted to connect with long-forgotten friends and colleagues. The content in our feed magically matches our moods and mindset. Such manipulation and synchronicity are central to what has become known as “surveillance capitalism,” which the Queen’s University sociologist Vincent Mosco first identified in 2014. In this system, there’s a basic trade‑off: you get free services (News Feed, Messenger, Instagram, and so forth), while the tech company gets your data and, with it, the ability to monitor your behaviour, draw insights from it, and monetize it. The truly creepy part about surveillance capitalism, as Harvard’s Shoshana Zuboff observes, is how it “unilaterally claims human experience as a free raw material”:

Although some of these data are applied to service improvement, the rest are declared as a proprietary behavioral surplus, fed into advanced manufacturing processes known as “machine intelligence,” and fabricated into prediction products that anticipate what you will do now, soon, and later. Finally, these prediction products are traded in a new kind of marketplace that I call behavioral futures markets. Surveillance capitalists have grown immensely wealthy from these trading operations, for many companies are willing to lay bets on our future behavior.

Perhaps you don’t mind being marketed this way. Perhaps the convenience of having products and services tailored to your behavioural patterns outweighs the creepiness of having a company know you better than you know yourself. Hundreds of millions — perhaps billions — of people don’t seem to mind at all. That’s if they explicitly understand the trade‑offs they’re making, which is a big if.

But what if these predictive practices go beyond the market for products and services and influence other behaviours? That’s exactly what happened in 2012 when Facebook played fast and loose with its users’ privacy, carrying out secret experiments and enabling the now defunct political consultancy Cambridge Analytica to build a “psychological warfare tool” in 2015, which was used to help elect Donald Trump a year later.

Again, Facebook is not the only company that is spending vast sums of money to keep you online to better understand your choices and consumer behaviour. Google does the same thing. So does Amazon, in its own way. Even Apple gleans enormous insights from the data it collects off its devices and off third-party apps sold on its App Store, although it doesn’t directly sell advertising. But it’s Facebook that has shown a willingness to allow the data it collects to be weaponized, as it were, to influence our most solemn democratic act: our vote. It should go without saying that that’s bad for democracy.

American lawmakers actually have robust tools with which to limit or stop Facebook from damaging our democracies in this manner. (Canadian legislators, for their part, have been very slow to develop digital rules of the road.) For example, rather than consider monopolistic behaviour through a narrow “consumer welfare” lens — which roughly equates lower prices with the absence of a monopoly — Congress might follow the so‑called New Brandeis approach to corporate monopolies, which considers a range of economic and political ends, and not merely consumer price, when determining whether a policy is anti-competitive. It could also beef up data protections, as the European Union has done with the General Data Protection Regulation, which went into effect in 2018.

Traditionally, Washington has preferred to leave the tech industry to regulate itself, reflecting its laissez-faire approach to commercial relations. But the United States has regulated industry more heavily in the past, and anxious or outraged citizens have a way of changing politicians’ minds. In fact, as the bipartisan case against Google demonstrates, reining in big tech — a twenty-first-century bout of trustbusting — may be one of the few moves that both Republicans and Democrats can support, though for different reasons.

That leaves the third charge against Facebook: that its platforms and algorithms are dividing us into irreconcilable camps whose lack of common ground is inimical to democratic participation. While such camps certainly exist on the site, it’s not at all clear that the platform itself is responsible for the cleavages. Nor is it clear what can be done about them.

Again, Facebook makes money by selling ads. Since you are the product, the longer you stick around, the greater the insights the company has about you, the more advertisers want to be there, and the more Facebook can charge for advertising. The primary ingredient that keeps you endlessly scrolling through your feed is the old publishing trick of serving you what you want to see, watch, or hear. There’s nothing particularly surprising or nefarious about that. Most of us certainly like to imagine ourselves as discerning consumers of a range of views and positions. But think of your actual news consumption. Do you frequently read, watch, or listen to sources that you disagree with? Probably not. Few Toronto Star readers also take the National Post. By and large, our media sources are mirrors that reflect ourselves back to us, feeding us stories that we use to buttress our already held beliefs. Facebook has merely perfected the recipe.

The second ingredient in the secret sauce is content that provokes strong emotional reactions. Unfortunately, posts that inflame negative emotions seem to work better at this than stories that arouse positive ones. This is one of the reasons the computer scientist Jaron Lanier, who has done pioneering work in virtual reality, has become a vocal critic of big tech in recent years, especially of social media. It’s why GQ magazine, which profiled Lanier in August, described him as “the conscience of Silicon Valley.”

Lanier’s 2018 book, Ten Arguments for Deleting Your Social Media Accounts Right Now, offered a stark warning. As he explained to New York magazine at the time, there’s a real power to negative emotions on social media:

Unfortunately there’s this asymmetry in human emotions where the negative emotions of fear and hatred and paranoia and resentment come up faster, more cheaply, and they’re harder to dispel than the positive emotions. So what happens is, every time there’s some positive motion in these networks, the negative reaction is actually more powerful.

Those reactions are powerful, in part, because of their habit-forming qualities. “People who are addicted to Twitter are like all addicts,” Lanier told GQ. “On the one hand miserable, and on the other hand very defensive about it and unwilling to blame Twitter.” Or, as the University of Toronto political scientist Ron Deibert puts it in his 2020 Massey Lectures, Reset, “You check your social media account, and it feels like a toxic mess, but you can’t help but swipe for more.” This quirk of human emotional behaviour is at the heart of the misinformation, the manipulation of information, and the sheer hatred that is all too frequently found on Facebook (Twitter, too, but it’s a comparatively tiny platform).

In a very real way, the platform succeeds when we become more polarized, when we find less common ground. In other words, Facebook succeeds when we fail. But is the company actually responsible for the speech that exists on its platforms? It argues that it isn’t — that it’s effectively like the phone lines of days past. Did we hold phone carriers responsible for the contents of the conversations that took place on them?

Critics counter that Facebook inappropriately benefits from section 230 of the U.S. Communications Decency Act, which states, “No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.” That has shielded the company from liability over its users’ posted content. If the company were regulated as a news organization, for instance, it would have to clean up its act quickly.

Neither argument is completely convincing, however. Yes, Facebook resembles the phone company in that it allows electronic communication over great distances, but, unlike phone conversations, much of that communication is public, not private. Yes, it disseminates information that may be called “news,” but, unlike traditional publishers, it creates virtually none of that information itself. The fact is that we’ve never seen an entity such as Facebook before. The company has provided the means for billions of people to act as publishers themselves, free to post and share whatever comes to their minds. It is both phone company and news organization — even as it is neither one of those things — based in one country but operating globally. Taking away section 230 protections from Facebook might help enforce existing laws on certain types of speech (hate speech, libel, incitement to violence), and that is probably an overdue correction. But lawmakers in the United States and elsewhere will still need to think of new forms of regulation for hybrid entities such as Facebook — as Mark Zuckerberg himself said earlier this year. (And he is not the only tech executive to warn of the absence of effective regulation in tech.)

Let’s be clear: the removal of section 230 protections and the addition of new regulations would do little to bridge our differences. After all, what law is being broken when anti-vaxxers spread their absurdities on Instagram or when QAnon followers (yes, they’re in Canada, too) disseminate their crazy, conspiratorial pseudo-ideas? Facebook is no more responsible for the belief that vaccines cause autism than the printing press was responsible for the belief among some northern European monks that the road to salvation lay outside Rome.

Of course, that hasn’t stopped Facebook veterans and other Silicon Valley VIPs from criticizing the company and the technology it has unleashed on the world. The Canadian-American venture capitalist and former Facebook senior executive Chamath Palihapitiya, for one, said in 2017, “I think we have created tools that are ripping apart the social fabric of how society works.” Facebook’s first president, the entrepreneur and philanthropist Sean Parker, who also co-founded the peer-to-peer music service Napster, warned that same year, “It literally changes your relationship with society, with each other.”

I suppose we should be grateful that insiders are warning about the dangers of social media, but only Silicon Valley types have the hubris to assume that their work is uniquely able to destroy democratic societies. Democracy is indeed a very slender reed if a company founded in 2004 can destroy it by 2020. Yes, democratic societies have big problems: the loss of faith in the institutions that help us provide meaning and order in our lives; the sclerotic governments that are being asked to shoulder ever greater responsibilities; the strident individualism and intolerance on the right and the left; the inequitable distributions of income and imbalances in the accumulation of wealth. These woes, and more, have led to and are exacerbated by zero-sum politics that lacks a conception of the common good. But such grievances predate Facebook and would exist without it. Ernst Zundel and Jim Keegstra didn’t need social media to spread hate in Canada. The Warren Commission, which issued its final report twenty years before Mark Zuckerberg was born, spawned conspiracy after conspiracy. Social media didn’t create the cultural cleavages of the 1960s and ’70s, either. And Donald Trump didn’t need it to cast unfounded doubt on the 2020 election results, or the validity of mail-in ballots (though it certainly helped).

In fact, in spotlighting and amplifying these problems, Facebook may actually be providing a civic service by showing us the challenges we face in preserving democracy and allowing it to evolve in a digital world. Consider this well-known passage from John Stuart Mill:

The whole strength and value, then, of human judgment, depending on the one property, that it can be set right when it is wrong, reliance can be placed on it only when the means of setting it right are kept constantly at hand. In the case of any person whose judgment is really deserving of confidence, how has it become so? Because he has kept his mind open to criticism of his opinions and conduct. Because it has been his practice to listen to all that could be said against him; to profit by as much of it as was just, and expound to himself, and upon occasion to others, the fallacy of what was fallacious.

Reasoned judgment is essential in the pursuit of truth, just as it is essential to our conception of democracy. It is the duty of citizens “to form the truest opinions they can” and, quite literally, govern themselves accordingly. Beyond a desire to separate opinion from fact, reasoned judgment requires time and the tools to do so.

We need to determine how we’ll accomplish that in a digital world. Estimates are that within five years, we will create nearly 500 exabytes of data a day (that’s 500 billion gigabytes). In such a world, how are we supposed to separate fact from opinion? But this is an epistemological problem, not a technological one — and much less one of bad corporate behaviour. Put simply, the challenge is to determine what we know, how we know it, and how we know it to be true.

All revolutions challenge our view of what’s true by dismantling the status quo, by demolishing the familiar. The digital revolution in information and communication is no different. Facebook — and other successful platforms — have eliminated many of those arbiters who previously helped us define the truth. But there were always those who didn’t accept the legitimacy of sanctioned referees; they just lacked the means to meet easily, share their ideas easily, and discuss them easily and out in the open. Removing or limiting their means of communicating may once again make them invisible to us, but it will not make their ideas disappear.

So, by all means, boycott Facebook. Delete it. Encourage your friends to do the same (you may well feel better). Canadian diplomats and elected officials may wish to encourage their American counterparts to impose tougher antitrust and data-protection laws on the company in defence of our common interests. But let’s be honest with ourselves: our biggest democratic challenge isn’t Facebook. It is to find a newly acceptable standard of truth in an era of information expansion on a global scale.

Inspirations

John Stuart Mill

John W. Parker and Son, 1859

Jaron Lanier

Henry Holt and Company, 2018

Shoshana Zuboff

PublicAffairs, 2019

Ronald J. Deibert

House of Anansi, 2020

Dan Dunsky was executive producer of The Agenda with Steve Paikin, from 2006 to 2015, and is the founder of Dunsky Insight.