When Dave Eggers published The Circle (2013), a dystopian novel about a social media empire that had created a blandly totalitarian world of mindless clicking and total surveillance, run by rich young Silicon Valley overlords certain they were saving the world, he was met by savage pushback. In a common verdict, The New Republic called The Circle “overwrought paranoia” and “a bumbling and ill-formed satire on the invasive, monolithic beast he imagines the tech industry to be.”

Five years on, Eggers must be feeling vindicated. He was writing during the Obama years, when a better world seemed within reach to many of his readers. The landscape looks very different in light of allegations of Russian social media interference in U.S. electoral politics, and revelations that Cambridge Analytica, working on behalf of the Trump campaign, acquired data from tens of millions of Facebook users without their permission, and that it was also working for the Leave side in the Brexit referendum. Perhaps more significant, if less discussed, is that Facebook, Google, and Twitter all offered to embed in both the Trump and Clinton campaigns, though only Trump’s team accepted.

If fake news is zipping around the world and democracies are being gamed by trolls who include—but are hardly limited to—foreign actors, the time has come to question the platforms that make this not only possible but child’s play (sometimes literally). As tech critic Evgeny Morozov argued in an article in the Guardian last year, the current obsession with the Kremlin is often an excuse to avoid acknowledging a more pervasive problem closer to home: that, for decades, parties of the centre left and centre right have praised the genius of Silicon Valley and privatized telecommunications while taking a lax stance on anti-trust enforcement.

It’s in the context of this unprecedented experiment with democracy that Jaron Lanier’s Ten Arguments for Deleting Your Social Media Accounts Right Now makes the case that “We’re all lab animals now.” Lanier’s latest book is a continuation of ideas the maverick computer scientist was formulating long before his influential 2006 “Digital Maoism” manifesto, where he warned of “future social disasters that appear suddenly under the cover of technological utopianism.” He was particularly worried about the human tendency to surrender our identities to mass movements, which “tend to be mean, to designate official enemies, to be violent, and to discourage creative, rigorous thought.” Why, he wondered, should online collectivism, whether on the left or right, be any different?

Michael George Haddad

In his 2010 book, You Are Not a Gadget, he expanded his critique of technology’s dehumanizing effects, from online mob rule to the trend toward obsessive reputation management to the harm done to creativity. He speculated about how corporations that give their (and our) services away would find a way to profit: “The only hope for social networking sites from a business point of view is for a magic formula to appear in which some method of violating privacy and dignity becomes acceptable.”

It did, and Ten Arguments explores what that magic formula has wrought. The book is an illuminating and surprisingly entertaining account of how an unfortunate confluence of math, psychology, and market incentives has allowed a level of manipulation that threatens our sanity, and perhaps civilization itself. Other recent books such as Franklin Foer’s World Without Mind or Siva Vaidhyanathan’s Antisocial Media offer more scholarly takes on the pernicious effects of surveillance capitalism, but Lanier’s gift is to make the subject matter approachable to the young people who have never known a different world.

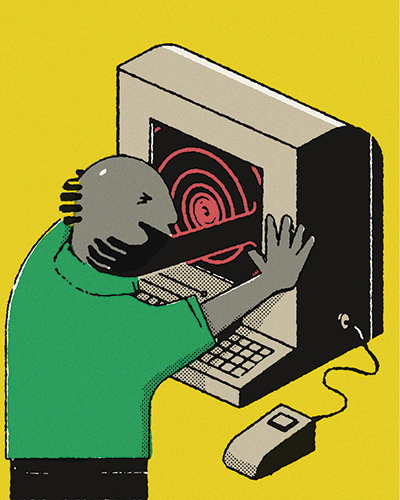

In the chapter titled “You are Losing Your Free Will,” he highlights how normal it has suddenly become for most people to “carry a little device called a smartphone on their person all the time that’s suitable for algorithmic behavior modification.” Constantly tracking and measuring us, these algorithms deliver individualized feedback designed to mould our choices and herd us into groups—the better to micro-target us. Without even realizing it, he argues, we are gradually being “hypnotized” by “technicians we can’t see, for purposes we don’t know.”

Other techies have lately started dishing up the details. In November 2017, Sean Parker, Facebook’s first president, told a conference that social-media creators knew from the start they were exploiting “a vulnerability in human psychology” by delivering “a little dopamine hit” at irregular intervals, through likes or comments, to generate addiction. Such Pavlovian conditioning is designed, Parker noted, “to consume as much of your time and conscious attention as possible.” This is because such corporations have no way of making money except by selling your attention. And they’ve done rather well by it: among the ten richest corporations in the world (by market capitalization, as of August), Alphabet (Google’s parent company) and Facebook sit at number 2 and 8, respectively.

Shortly after Parker went public, Chamath Palihapitiya, formerly Facebook’s VP for user growth (he left the company in 2011), made a remarkable admission to a student group: “The short-term, dopamine-driven feedback loops we’ve created are destroying how society works,” he said. “No civil discourse, no cooperation; misinformation, mistruth. And it’s not an American problem—this is not about Russian ads. It’s a global problem…It is eroding the core foundation of how people behave.” Citing a recent case in India where fake news of a child kidnapping plot spread on WhatsApp (owned by Facebook) and led to the lynching of seven people, he urged taking a “hard break” from these and other platforms, adding that he doesn’t allow his children to use “that shit,” or use it himself. (WhatsApp introduced sharing restrictions in India in July after a spate of such lynch mobs—not to mention threats of government regulation—though critics have questioned the company’s commitment to curtailing what is essentially the basis of their business model.)

Those little dopamine hits are only part of the story. As Lanier writes, what turns out to capture our attention most easily is negative feedback: fight-or-flight emotions such as anger and fear. The algorithms figuring out how to keep us clicking have shown that negativity gives the best bang for the buck, hence the ugliest voices are amplified. “Social media is biased, not to the Left or the Right, but downward,” he writes.

He is careful to say this didn’t happen on purpose. “There is no evil genius seated in a cubicle…deciding that making people feel bad is more ‘engaging’ and therefore more profitable…The prime directive to be engaging reinforces itself, and no one even notices that negative emotions are being amplified more than positive ones.” That may be true, but the humans in charge have taken note. Last year the Australian published details of a leaked Facebook presentation in which the corporation told advertisers they had figured out how to tell if teens felt “insecure” or “worthless,” and had data on their relationship status, locations, and body-image anxiety. We also know Facebook buys credit-card purchasing details to augment its databases, makes shadow profiles of people who have never opened an account, and has been pressing banks to allow it access to private customer financial records.

Lanier is more concerned with what happens on a deeper personal level, and he offers interesting insights into why social media interactions so often turn nasty, even among people who are natural allies. He speaks not only about tangible effects—increased depression, social isolation, extremism, and disinformation—but about what happens to our very character. In the chapter titled “Social Media is Making You Into an Asshole,” he pins part of the blame on the addict personality—if usage stats are any guide, that’s steadily more of us, though it’s easier to spot in others, “especially if you don’t like them”: the combination of grandiosity and insecurity, a fetish for exaggeration, eventually leading to a weird need to seek out suffering, and an eagerness to take offence and pick fights. But equally responsible is a social structure that triggers a pack mentality in which status and intrigues take precedence. “The only constant basis of friendship is shared antagonism toward other packs,” he writes. And because what we see is tailored by algorithms—even Google search results—we are cut off from what others see so completely that we lose our “theory of mind”: the ability to build a picture of what’s going on in other people’s heads. This undermines our capacity for empathy. “If you don’t see the dark ads, the ambient whispers, the cold-hearted memes, and the ridicule-filled customized feed that someone else sees, that person will just seem crazy to you.”

What do we become when we surrender our free will to a higher power that controls what we see and influences how we behave? What happens when we are granted regular access to the darkest parts of ourselves, what he calls our “inner troll”? The algorithms working to change our behaviour need only do so a little bit, he writes. “But small changes add up, like compound interest.”

Other researchers have done more work on where this relentless nudging can lead—sometimes to places we would never go by choice. Sociologist Zeynep Tufekci is the author of last year’s excellent Twitter and Tear Gas, which explored how movements such as the 2011 uprising in Egypt achieve swift success with the help of social media, but fall victim to the very ease of early gains; it turns out organizational resilience and true leadership can only be forged in time-honoured ways. Her book offers, among many things, a cogent primer on the workings of algorithms, but she only came upon YouTube’s bizarre tendencies more recently, while researching the 2016 U.S. election campaign. When she was clicking on rallies for Donald Trump, YouTube (owned by Google) swiftly led her to videos that featured white supremacist rants and Holocaust denials. Watching footage of Hillary Clinton and Bernie Sanders’s campaigns, she found that mainstream coverage on YouTube soon led her, via the site’s recommendations, to 9/11 conspiracy theories and the like.

This slide into extremism happened with even humdrum searches. When she watched a video on vegetarianism it was followed by another on veganism; one on jogging led to a video about ultra-marathons. “It seems as if you are never ‘hard core’ enough for YouTube’s recommendation algorithm,” she wrote in the New York Times in March. “It promotes, recommends, and disseminates videos in a manner that appears to constantly up the stakes. Given its billion or so users, YouTube may be one of the most powerful radicalizing instruments of the 21st century.”

She puts this down not to conspiracy theories but, as Lanier also contends, to a business model that uses algorithms to figure out what material will hold your attention so it may be sold to advertisers. As she puts it, “We’re building a dystopia just to make people click on ads.”

Lanier doesn’t dispute that social media has given much to some, particularly those on the margins who have discovered community, resources, and a voice through its platforms. Nor does he dismiss the benefits of the internet as a whole, merely this now-dominant manifestation. “It’s great that people can be connected,” he writes, “but why must they accept manipulation by a third party as the price of that connection?” He believes there are ways of harnessing the good without the bad, but this involves changing the business model—he favours a subscription system with subsidies for those who can’t afford to pay. In the meantime, because asking these corporations to reform themselves only hands them even more power to run our lives, he suggests taking the advice given to anyone in a toxic relationship: just leave.

Can journalists, writers, and media outlets afford to do that? It’s not just an attention economy, it’s a real economy, and the playing field is anything but level. According to the Canadian Media Concentration Research Project, Google and Facebook sucked up 72 percent of internet advertising in Canada in 2016, more than $3.9 billion. Yet those revenues are tax-exempt. By contrast FP Newspapers (publisher of the Winnipeg Free Press), a relatively small player in the Canadian newspaper industry, pays $17 million a year in total taxes. And whereas Canadian companies are restricted from deducting advertising dollars paid to foreign media as business expenses, there’s a loophole for foreign online advertising. (Google Canada, which alone accounts for almost half of digital ad spending in this country, has been actively fighting reform.)

The European Union, alone in the world, has made encouraging strides in anti-trust enforcement and data privacy legislation, and is investigating the tech giants’ tax practices. So publishers and media organizations were optimistic about a proposed EU bill to force companies to honour copyright and remove or pay for unauthorized content. Their hope was in vain. The law was defeated in July after a ferocious six-month lobbying effort by Google and Facebook. “The real issue is Google’s market power,” said Lionel Bently, a law professor at the University of Cambridge. The very organizations that feed off news, music, and literary creation, not to mention our data, our thoughts, and our dreams—talk about cultural appropriation—can continue to siphon the profits for work they did not do while enjoying unfair advantages.

Social networks are also, it’s now clear, instrumental in blurring the distinction between true and false reporting. Fake news, like a mutant species that ends up killing off those it strives to imitate, turns out to perform much better on social media than honest reporting that relies on resource-intensive practices such as documentation and fact-checking. An MIT study found that false news is 70 percent more likely to be retweeted than verifiable reports of actual events. One would like to think that journalists can challenge the lies that breed whenever false news goes viral, but instead they are being forced to adapt by conforming to click-based values. Pity the newsroom reporters who must now watch their articles dispiritingly ranked in real time.

One of the finest books on the subject of journalism I have read in years is Seymour Hersh’s aptly titled memoir, Reporter. It’s the story of how a pugnacious Chicago-born son of a drycleaner and a housewife—both immigrants with little formal education—went on to break the story of the My Lai massacre in Vietnam, galvanizing opposition to that war, and gave generations of politicians, shady operators, and at times his own editors, stomach ulcers if not cause for resignation. It’s a well-told story of what ambitious, skillful, and resourced reporting on matters of genuine public interest can do, but in another way it’s a chronicle of what’s been lost.

I had lunch with Hersh in Washington a decade ago, downstairs from his cheerfully dingy office piled high with yellow legal pads, and found him as irascible, funny, brash, curious, and generous to a younger writer as his new book would suggest. That was the year, 2008, when it became clear serious journalism was heading for crisis. In the book’s introduction he reviews the fake news, contrived punditry, hyped-up and incomplete information, and false assertions spouted not only by social media but by newspapers, television, news sites, and sitting politicians.

Hersh’s memoir reads like a hardboiled cross between John le Carré and Raymond Chandler, and is full of instructive nuggets, such as the advice he learned as a rookie crime reporter: “If your mother says she loves you, check it out.” When he throws his typewriter through the window of the New York Times over a story of underworld corruption few wanted him to write, or makes an enemy for life of the Iraq war architect Dick Cheney, it’s hard not to feel cheered—something is right with the world. But when he talks about stories with “legs,” ones that keep on generating news down the line, it becomes hard to think of many current examples that aren’t salacious or spurious or pointlessly negative or pandering—the very sort of thing our new world thrives upon.

The problem is not only fake news, or no news, but that the kind of news flourishing in this environment can undermine citizen participation and political engagement, fuelling a sense of impotence and rage. Of the hundreds of news outlets that have shut down this decade as revenue has migrated online (and upwards to the big-money investors who are bleeding the corpses while they still can), most have been local sources. Their disappearance has left countless communities in the dark about their own affairs—a condition known as “news poverty.” The steep decline of local news means the coverage that remains is devoted to broader stories that appeal to a national or international audience, the sort of information most people can do little about. Local affairs, unless they are metaphors for widely shared concerns or are just weird enough to tap the zeitgeist, go unreported. Nobody is going to write about your local school board unless a moose barges into a meeting.

It’s hard to blame news agencies, or writers, for succumbing to the dictates of social media—most are barely surviving as it is. After all, even Hersh doesn’t hold out much hope for his own chances were he coming up today. But Lanier poses a thoughtful question that the two iconoclasts might agree upon: “What if listening to an inner voice or heeding a passion for ethics or beauty were to lead to more important work in the long term, even if it measured as less successful in the moment? What if deeply reaching a small number of people matters more than reaching everyone with nothing?”

In The Circle, Eggers portrays a neo-fascism in which power is invisible and citizens willingly give up their freedom for the online equivalent of a report card with gold stars. Spoiler alert: there is no happy ending. But I recently re-read a classic dystopian satire, Aldous Huxley’s Brave New World, that is a mite more hopeful. Published in 1932, it’s about a society scientifically engineered to produce happy, hard-working consumers who do as they are relentlessly told. Toward the end, the chief propaganda engineer begins to doubt the value of surrendering his gifts to triviality and is banished to an island for writing a poem in praise of solitude. Unexpectedly, he finds himself happy: “I feel,” he says, “as though I were just beginning to have something to write about.”

Deborah Campbell is the author of A Disappearance in Damascus, which won the Hilary Weston Writers’ Trust Prize and the Hubert Evans BC Book Prize. She is an assistant professor in the department of writing at the University of Victoria.